Yuntian Deng

Assistant Professor, UWaterloo

Associate, Harvard SEAS

Faculty Affiliate, Vector Institute

PhD in CS, Harvard

[CV] [Google Scholar] [GitHub] [X/Twitter] [LinkedIn] [Semantic Scholar]

My research focuses on understanding and improving how language models reason, with a particular emphasis on internalizing explicit reasoning processes into implicit computation. I also study real-world LLM usage at scale through large-scale conversation datasets and build open tools and demos that make our research accessible.

Our work on WildChat has been featured in the Washington Post and is used in OpenAI's o1 and Anthropic's Claude 3 for safety evaluation. Our open-source toolkit OpenNMT has been widely adopted in both industry and academia. I received my PhD from Harvard University, where I was advised by Prof. Alexander Rush and Prof. Stuart Shieber. I did a postdoc under the supervision of Prof. Yejin Choi.

Research Themes

Neural World Models

Revamping how machines interact with humans, using generative AI instead of rigid menus and rules. We build neural models that simulate entire computing environments end-to-end.

NeuralOS

Program as Weights

A new programming paradigm that replaces symbolic code for fuzzy functions with compiled neural programs. Towards programming without code as an intermediary.

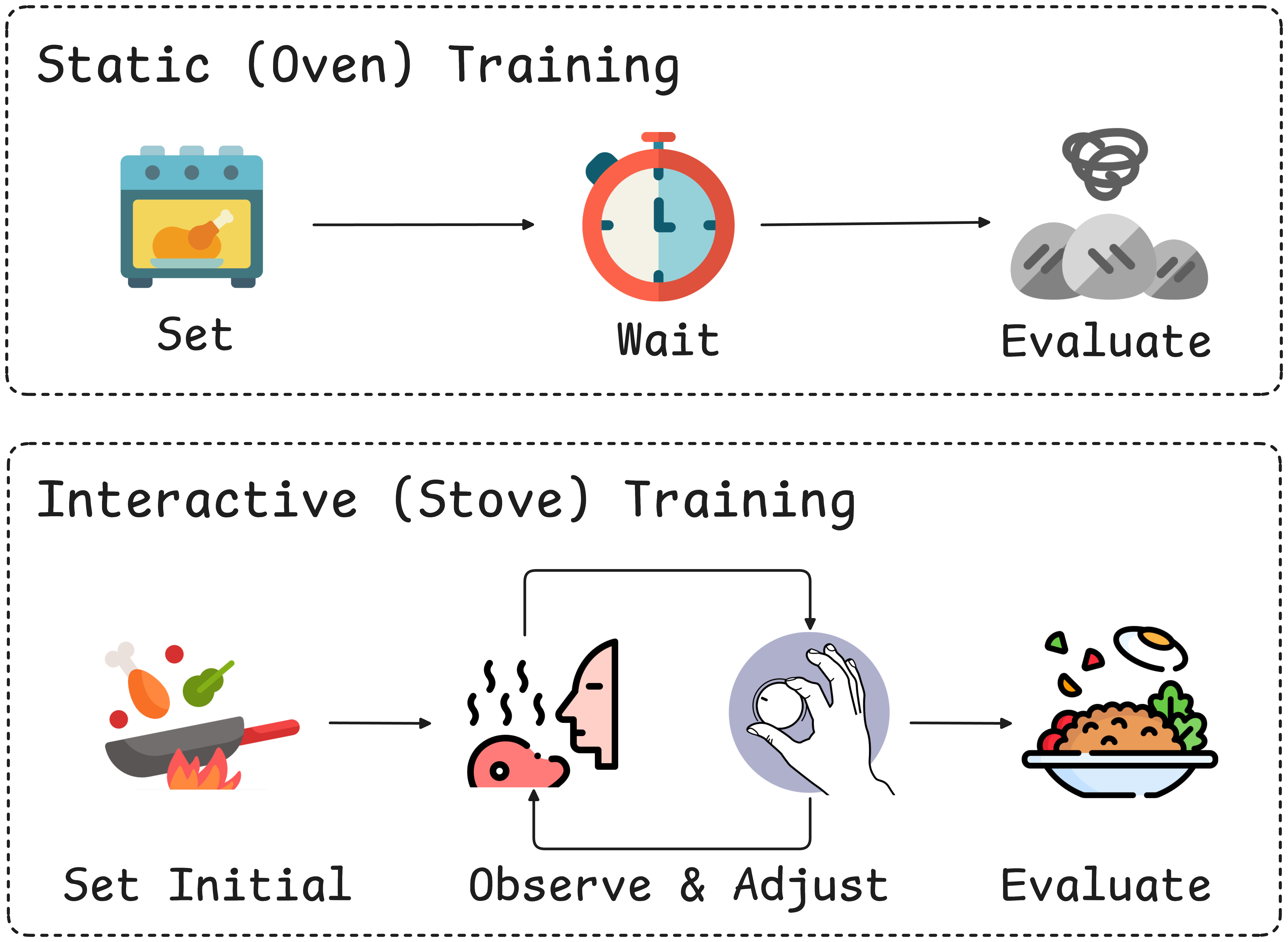

programasweights.com

Implicit Reasoning

Can language models learn to reason without explicitly articulating every step? We develop methods to internalize chain-of-thought reasoning into a model's hidden computation.

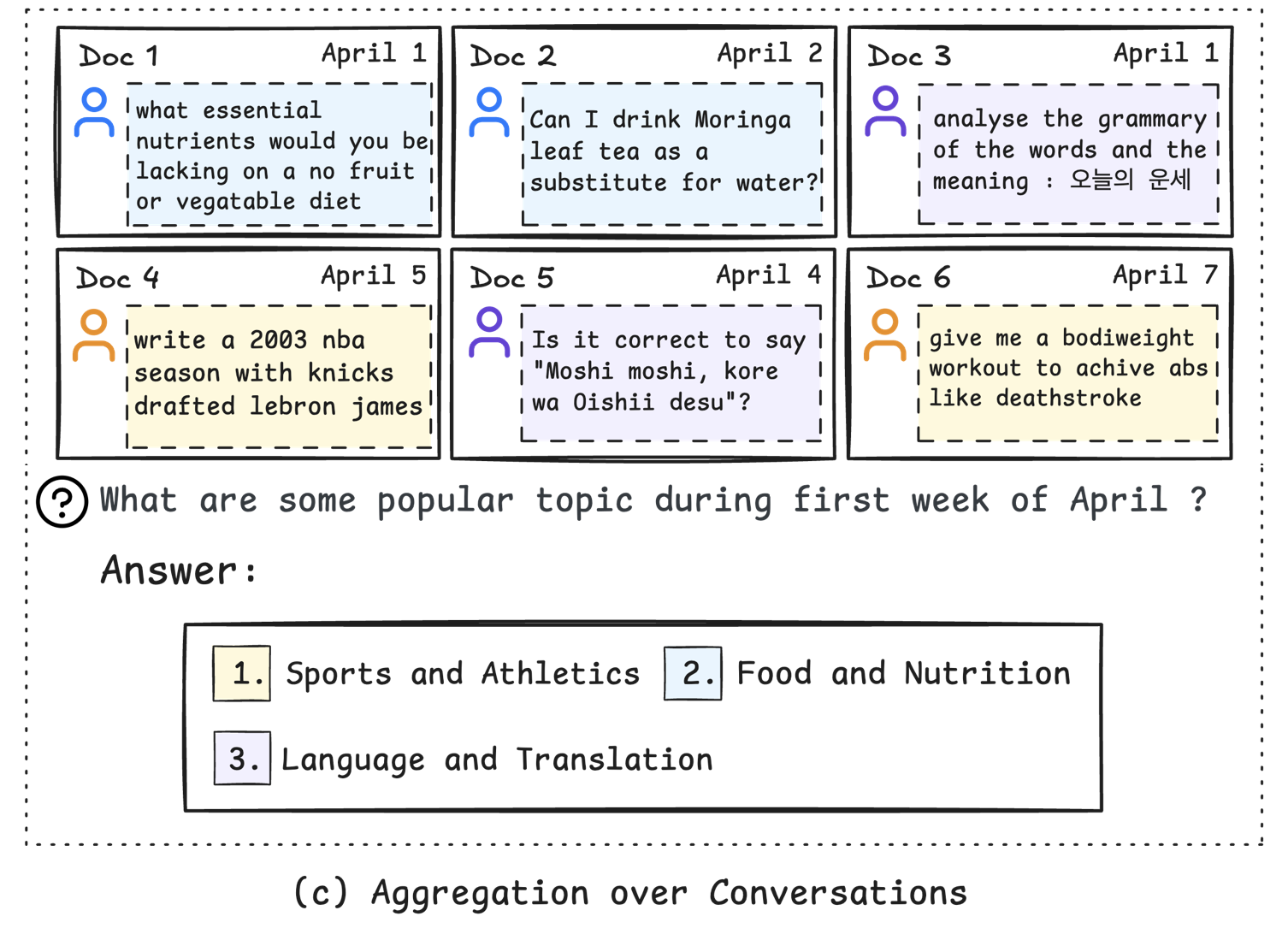

Internalize CoT · Implicit CoT · ICLR 2026 Workshop

LLM Usage at Scale

How do people actually use large language models? We collect and analyze millions of real-world conversations to understand usage patterns, safety, and emergent behaviors.

WildChat · WildVis · Aggregative QA · In the Media

News

- Dec 24, 2025: Organizing the Latent & Implicit Thinking - Going Beyond CoT Reasoning Workshop at ICLR 2026.

- Dec 19, 2025: My PhD student Wentao Zhang was selected as one of two University of Waterloo nominees advancing to the proposal round of the 2026 Qualcomm Innovation Fellowship.

- Dec 16, 2025: Became Co-Chief Technical Officer (Co-CTO) for ACL Rolling Review (ARR).

- Dec 5, 2025: Featured in a Canadian Press article about developing a tool to help courts spot AI-generated evidence.

- Aug 11, 2025: Released WildChat-4.8M, a dataset of 4.8M real user-ChatGPT conversations collected from our public chatbots, including conversations with the reasoning models o1-preview and o1-mini.

- Jul 14, 2025: We built a demo, NeuralOS, an operating system entirely powered by neural networks. Check out our paper for more details!

- Nov 30, 2024: Featured in an NZZ article about WildChat.

- Nov 20, 2024: Gave a talk at NYU CILVR on implicit chain of thought reasoning.

- Oct 2, 2024: Interviewed by TechCrunch to discuss the challenges of using LLMs to solve basic math problems.

- Aug 4, 2024: WildChat was featured in the Washington Post! This work collected 1M real-world user-ChatGPT conversations. You can explore the full dataset at wildvisualizer.com, or try our data-collecting chatbot at huggingface.co/spaces/yuntian-deng/ChatGPT4.

- Jul 19, 2024: I built a demo using GPT-2 to directly produce the product of two numbers without chain-of-thought (CoT). CoT is internalized using the approach in From Explicit CoT to Implicit CoT: Learning to Internalize CoT Step by Step. A 12-layer GPT-2 can solve 20-digit multiplication with 99.5% accuracy!

- Jul 11, 2024: I built a demo to solve grade school math problems (GSM8K) without chain-of-thought (CoT) at 52% accuracy. CoT is internalized using the approach in From Explicit CoT to Implicit CoT: Learning to Internalize CoT Step by Step.

- Jun 19, 2024: I built a website, wildvisualizer.com, for interactive search of WildChat, allowing searches of 1M WildChat conversations by keyword, toxicity, IP address, language, and country.

- May 29, 2024: Our paper, From Explicit CoT to Implicit CoT: Learning to Internalize CoT Step by Step, is now publicly available! This paper proposes a simple yet effective method to teach language models to internalize chain-of-thought reasoning by gradually removing intermediate steps and finetuning.

- Apr 26, 2024: I built a demo, AKSelectionPredictor, to predict whether a paper will be selected by @_akhaliq into Hugging Face papers based on its title, abstract, and authors.

- Mar 5, 2024: Our dataset, WildChat, is used in Anthropic's Claude 3 for evaluating refusals.

- Nov 14, 2023: Our dataset, WildChat, is now publicly available! It is a corpus of 650K real-world user-ChatGPT interactions, spanning over 60 languages and a wide diversity of user prompts.

- Nov 7, 2023: Our paper, Implicit Chain of Thought Reasoning via Knowledge Distillation, is now publicly available! This paper trains LMs that reason internally using their hidden states instead of articulating every reasoning step as humans do.

- Mar 29, 2023: OpenAIWatch.com is launched! It tracks GPT-4's nondeterministic behavior, which persists even under greedy decoding, through its unicorn illustrations. 🦄

- Mar 29, 2023: Our GPT Chatbot, based on Yuvraj Sharma's code, is now live! It provides free access to GPT with the aim of collecting dialogue data for research purposes.

- Oct 18, 2022: Our paper, Model Criticism for Long-Form Text Generation, is now publicly available! This paper uses model criticism in latent space to quantify various notions of high-level coherence in long-form text generation.

- Oct 12, 2022: Markup-to-Image Diffusion Models demo is now live! This project uses a diffusion model to learn how to render various types of markup, including LaTeX.

- Jun 2, 2020: Our paper, Cascaded Text Generation with Markov Transformers, is available! It allows parallel, fast, autoregressive, and accurate text generation using a high-order Markov model.

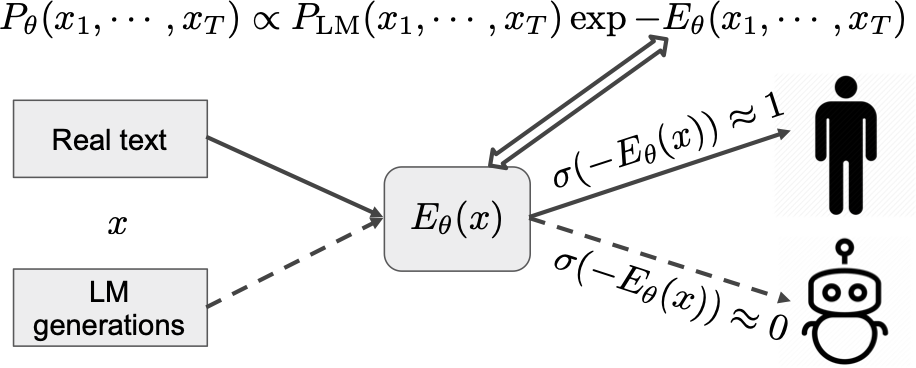

- Apr 26, 2020: Introducing Residual Energy-Based Models for Text Generation, a globally normalized approach to text generation! Our approach uses a global discriminator to guide a traditional locally normalized language model toward text that is harder to distinguish from human-written text.

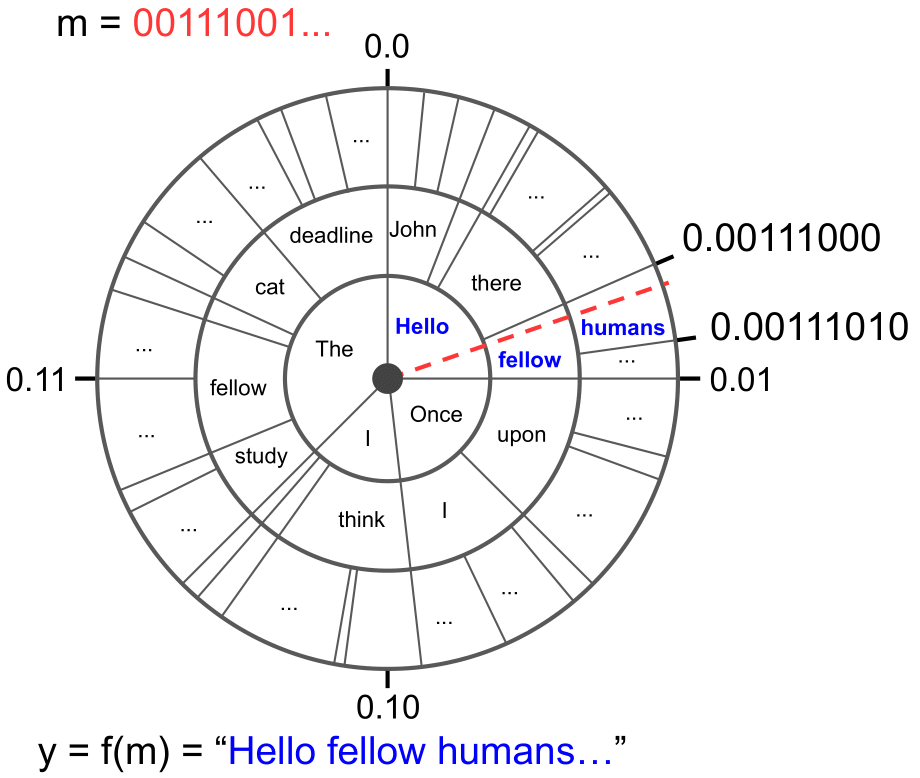

- Sep 5, 2019: Neural Linguistic Steganography demo is now live! This project lets you hide secret messages in natural language using arithmetic coding.

- Dec 19, 2016: Excited to introduce OpenNMT, an open-source neural machine translation toolkit developed for industrial and academic use.

- Sep 19, 2016: Excited to announce that we've provided a solution to OpenAI's requests-for-research im2latex challenge using neural sequence-to-sequence learning! Check out the visualizations here.

Current Research

Luke Rivard, Sun Sun, Hongyu Guo, Wenhu Chen, Yuntian Deng.

ICLR 2026

Wentao Zhang*, Liliana Hotsko*, Woojeong Kim*, Pengyu Nie, Stuart Shieber, Yuntian Deng.

Coming soon

Yuntian Deng, Yejin Choi, Stuart Shieber.

arXiv 2024

Yuntian Deng, Kiran Prasad, Roland Fernandez, Paul Smolensky, Vishrav Chaudhary, Stuart Shieber.

arXiv 2023

Wenting Zhao, Xiang Ren, Jack Hessel, Claire Cardie, Yejin Choi, Yuntian Deng.

ICLR 2024 (Spotlight)

Featured in the Washington Post

Used in OpenAI's o1 for safety evaluation

Used in Anthropic's Claude 3 for evaluating refusals

Other Selected Works

For all my papers, visit the publications page or my Google Scholar profile.

Wentao Zhang, Woojeong Kim, Yuntian Deng.

EMNLP 2025 (Oral)

Wentao Zhang, Yang Young Lu, Yuntian Deng.

EMNLP 2025 Demo

Yuntian Deng, Wenting Zhao, Jack Hessel, Xiang Ren, Claire Cardie, Yejin Choi.

EMNLP 2024 Demo

John Xavier Morris*, Chandan Singh*, Alexander M. Rush, Jianfeng Gao, Yuntian Deng.

EMNLP 2023

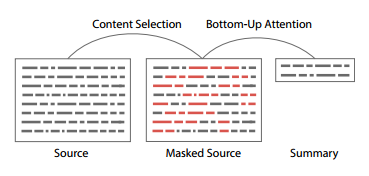

Yuntian Deng, Noriyuki Kojima, Alexander M. Rush.

ICLR 2023

Yuntian Deng, Volodymyr Kuleshov, Alexander M Rush.

EMNLP 2022

Yuntian Deng, Anton Bakhtin, Myle Ott, Arthur Szlam, Marc'Aurelio Ranzato.

ICLR 2020

Referenced by Meta's Llama 2

Referenced by the diffusion paper (DDPM)

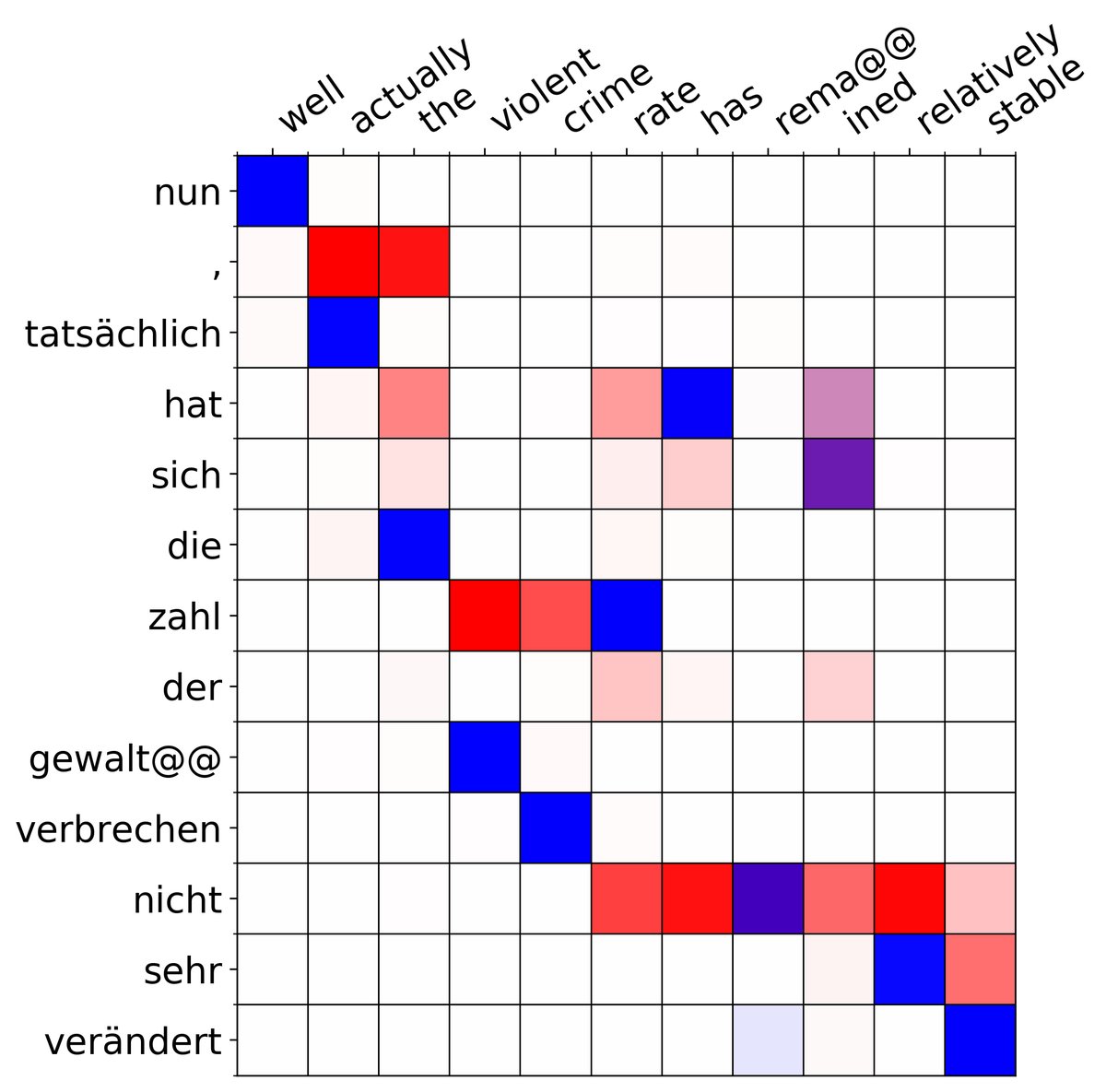

Yuntian Deng*, Yoon Kim*, Justin Chiu, Demi Guo, Alexander M. Rush.

NeurIPS 2018

Yuntian Deng, Anssi Kanervisto, Jeffrey Ling, and Alexander M. Rush.

ICML 2017

Guillaume Klein, Yoon Kim, Yuntian Deng, Jean Senellart, Alexander M. Rush.

ACL 2017 Demo (Best Demo Runner-up)

Service

- Co-Chief Technical Officer, ACL Rolling Review (ARR), 2025–present

- Workshop Organizer, Latent & Implicit Thinking Workshop, ICLR 2026

- Mentorship Chair, ICLR 2026

Prospective Students

I am not actively recruiting new students at this time. If you are genuinely interested in my research, I encourage you to read my recent papers and reach out with specific questions about the work. Due to high volume, I am unable to respond to generic inquiries.